Our Akash Thesis

Publish Date: Jan 24, 2024

Author: Vincent Jow

Executive Summary

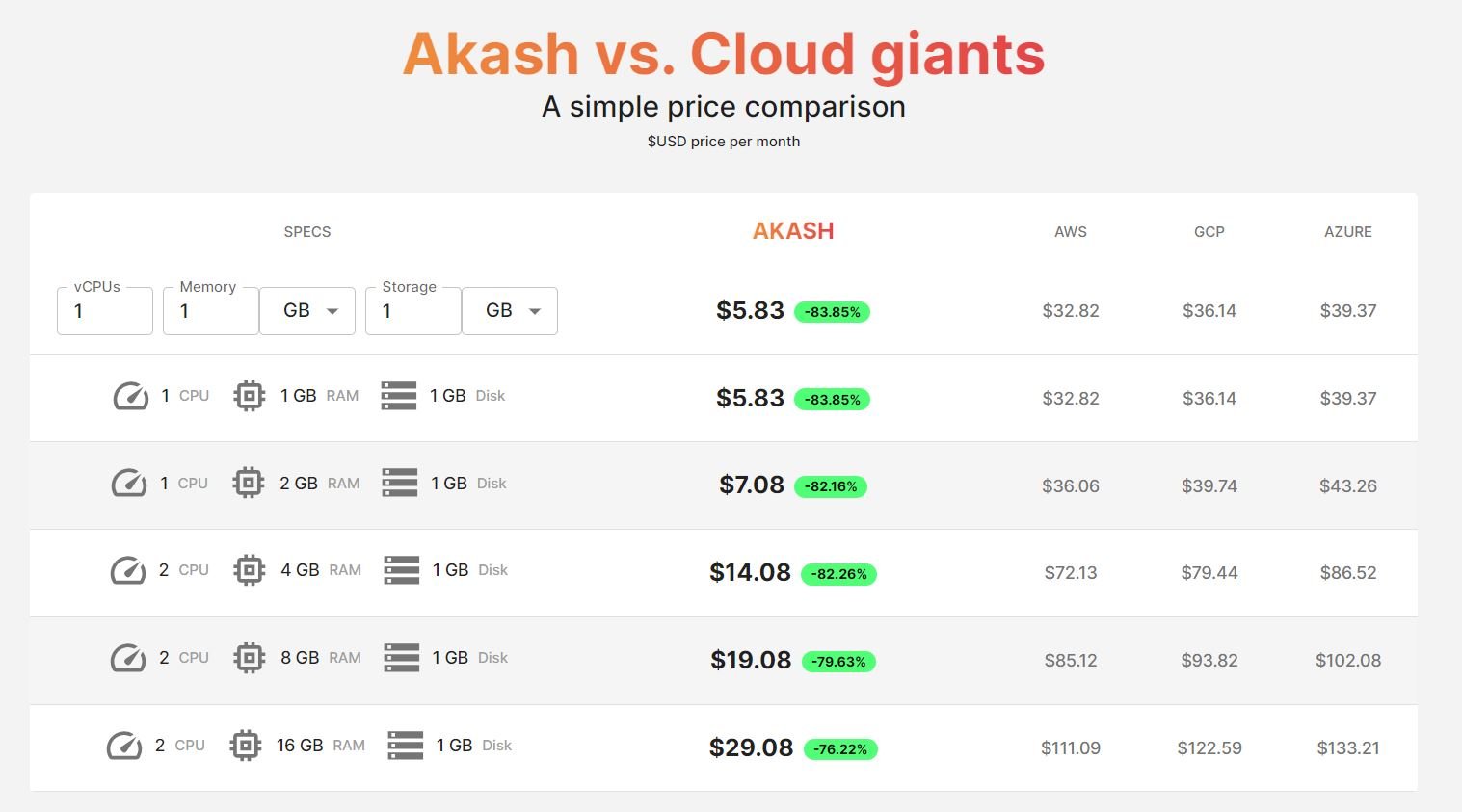

Akash is a decentralized compute marketplace that aims to connect underutilized GPU supply with users that need GPU compute, with the goal of becoming the “Airbnb” for GPU compute. Unlike its competitors, Akash is largely focused on general purpose, enterprise-grade GPU compute. Since its GPU mainnet launch in September 2023, the network has grown to 150-200 GPUs at 50-70% utilization, annualizing at $500K-1M of GMV. In line with internet marketplaces, Akash charges a 20% take rate on USDC payments.

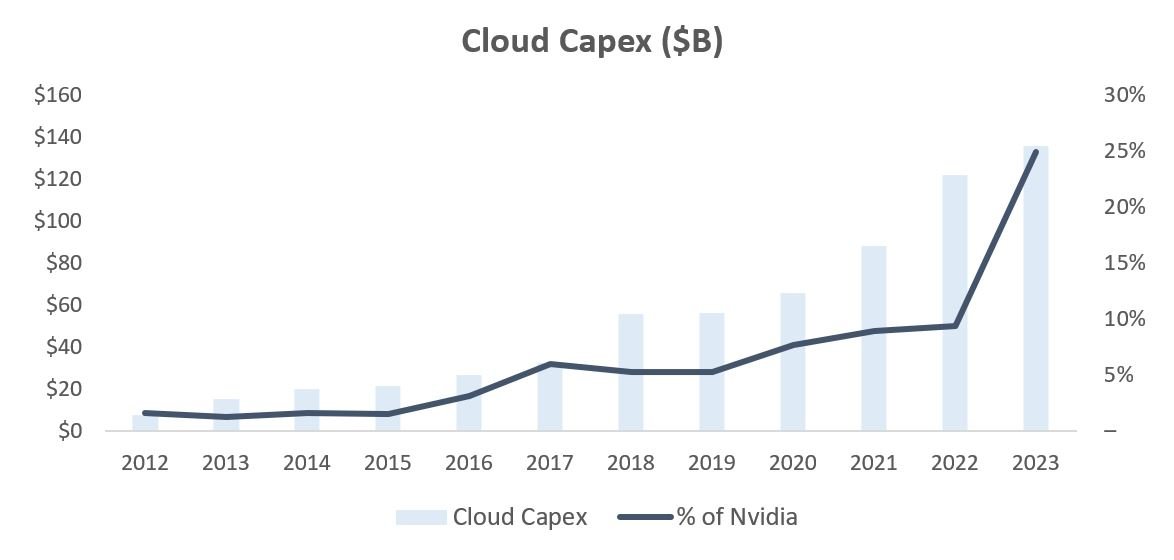

We are at the start of a tectonic shift in infrastructure, with a move to parallel processing driven by GPUs. Artificial intelligence is forecasted to add $7 trillion to GDP while automating away 300M jobs. Nvidia, which makes the GPUs that power these AI workloads, is forecasted to ramp revenues from $27B in 2022 to $60B in 2023 and to ~$100B in 2025. The share of cloud hyperscaler (AWS, GCP, Azure, etc.) capital expenditures going to Nvidia chips has grown from mid-single digits to 25% today and is expected to reach 50%+ over the next few years. (Source: Koyfin)

Morgan Stanley estimates that the GPU Infrastructure-as-a-Service (IaaS) opportunity for hyperscalers will reach $40-50B by 2025. Illustratively, if 30% of GPU compute is resold through secondary marketplaces at a 30% discount, this would be a $10B revenue opportunity. Adding another $5B revenue opportunity from non-hyperscaler sources brings the total to a $15B revenue opportunity. Assuming Akash is able to capture 33% market share of the opportunity ($5B of GMV) at a 20% take rate, this would translate to $1B of net revenue. Applying a 10x multiple yields a nearly $10B market cap outcome. [1]
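The arithmetic behind this illustrative outcome can be sketched in a few lines. This is a minimal sketch that simply chains the figures quoted above (a $10B hyperscaler resale pool, a $5B non-hyperscaler pool, 33% share, 20% take rate, 10x multiple); the function name `implied_market_cap` is hypothetical and introduced only for illustration.

```python
def implied_market_cap(gmv_pool_b, market_share, take_rate, revenue_multiple):
    """Chain a resale pool ($B) into GMV, net revenue and market cap ($B)."""
    gmv = gmv_pool_b * market_share            # marketplace volume captured
    net_revenue = gmv * take_rate              # fees retained by the protocol
    market_cap = net_revenue * revenue_multiple
    return gmv, net_revenue, market_cap


# Inputs taken from the paragraph above (all hypothetical forecasts).
hyperscaler_resale_b = 10.0
non_hyperscaler_b = 5.0

gmv, net_revenue, market_cap = implied_market_cap(
    gmv_pool_b=hyperscaler_resale_b + non_hyperscaler_b,
    market_share=0.33,
    take_rate=0.20,
    revenue_multiple=10,
)
print(f"GMV ${gmv:.2f}B, net revenue ${net_revenue:.2f}B, market cap ${market_cap:.1f}B")
```

Without rounding, the chain gives roughly $4.95B of GMV, $0.99B of net revenue and a $9.9B market cap, which is why the outcome is described as nearly $10B.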

Market Overview

When OpenAI launched ChatGPT in November 2022, it set the record for the fastest growing user base, hitting 100 million users by January and 200 million users by May 2023. The ramifications were enormous, with estimates of a $7 trillion increase to GDP through productivity increases and automating away 300 million jobs.

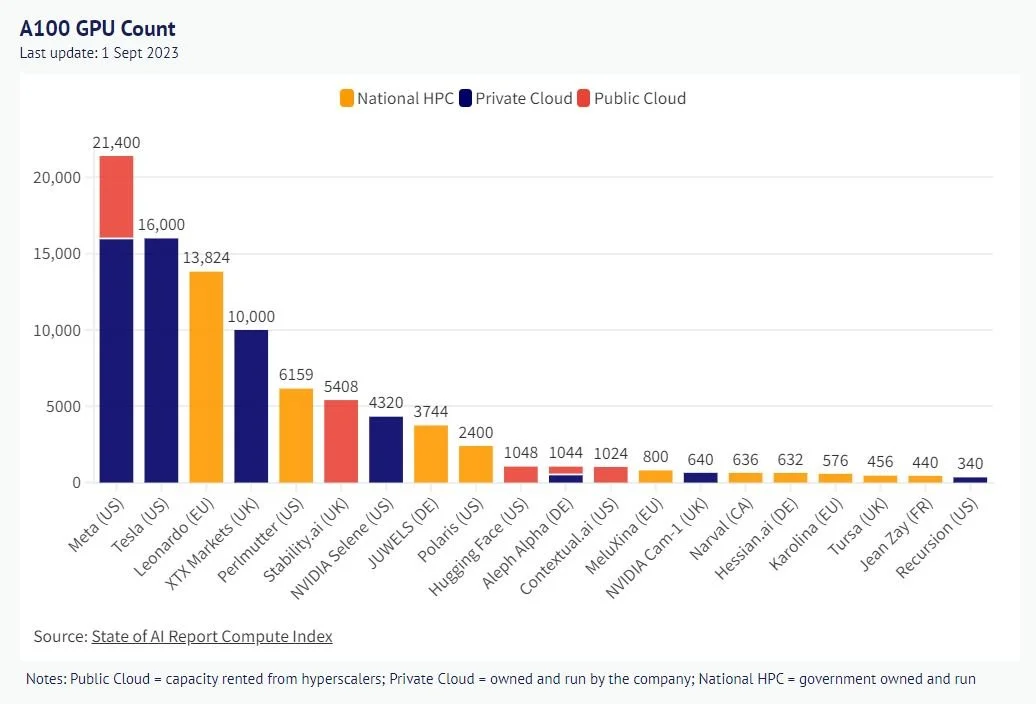

AI quickly emerged from a niche area of R&D into the biggest spending priority for companies. GPT-4 cost $100M to create and $250M to run annually. GPT-5 required 25,000 A100s (equating to $225M of Nvidia hardware) and likely $1B of total hardware investment. This created an arms race among companies to secure enough GPUs to power AI-driven enterprise workloads.

Source: State of AI. Public Cloud = capacity rented from hyperscalers; Private Cloud = owned and run by the company; National HPC = government owned and run

This AI revolution has ushered in a tectonic shift in infrastructure, accelerating the move from CPUs to parallel processing with GPUs. Historically, GPUs have been used to render and process images simultaneously at scale while CPUs, which are designed to run serially, could not. With high memory bandwidth, GPUs evolved to tackle other calculations with parallel problems, such as training, refining and improving AI models.

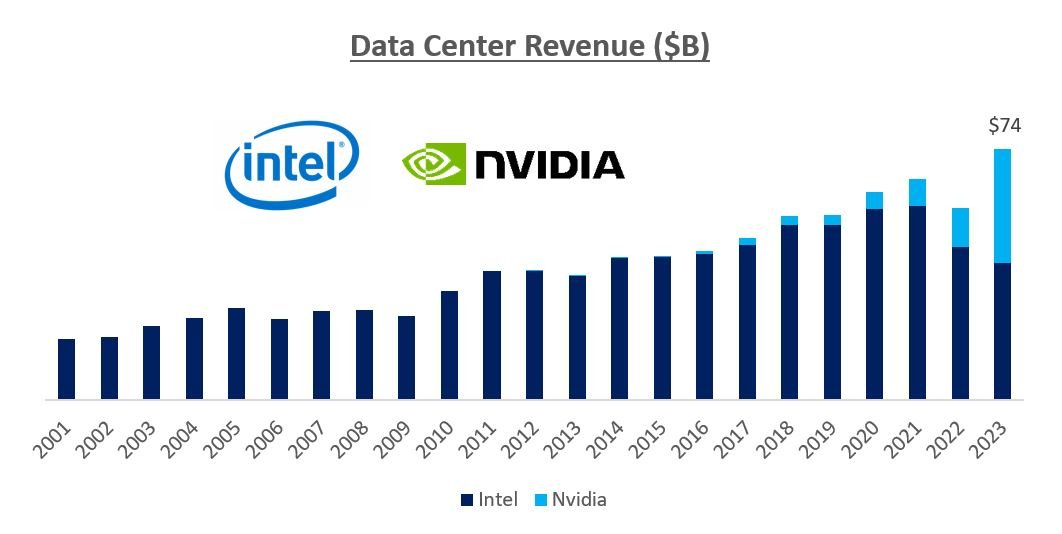

Nvidia, which pioneered GPUs in the 1990s, has combined best-in-class hardware with its CUDA software stack, building a multi-year lead versus the competition (largely AMD and Intel). Nvidia’s CUDA stack, developed in 2006, allows developers to optimize Nvidia GPUs to accelerate their workloads while streamlining GPU programming. There are 4M CUDA users and 50K+ developers working on CUDA, with a robust ecosystem of programming languages, libraries, tooling, apps and frameworks. We expect Nvidia GPUs will flip Intel and AMD CPUs within the data center over time.

Source: Public filings and analyst estimates

The hyperscalers and big tech are rapidly spending more on Nvidia GPUs, ramping from a low-single digit percentage of capex in the early 2010s to mid-single digits in 2015-2022 to 25% in 2023. We believe Nvidia will represent 50%+ of cloud capex spend in the next few years. This is expected to catalyze Nvidia’s revenue from $25B in 2022 to $100B by 2025 (Source: Koyfin).

Source: Public filings and analyst estimates

Morgan Stanley has sized the GPU IaaS opportunity for the hyperscalers at $40-50 billion by 2025. This is still a fraction of total hyperscaler revenue, with the three largest hyperscalers doing ~$250B+ of revenue today.

Source: Public filings and analyst estimates

Given the strong demand for GPUs, there has been a massive supply shortage, which has been well-documented by the New York Times and Wall Street Journal. The AWS CEO has said “demand is outstripping supply, and that's true for everybody”. Elon Musk said on the 2Q23 Tesla earnings call “We'll continue to use -- we'll actually take Nvidia hardware as fast as Nvidia will deliver it to us”. Index Ventures has resorted to buying chips for its portfolio companies. Outside of the major tech companies, it is nearly impossible to buy chips from Nvidia and there are long wait times to access chips from hyperscalers.

The comparison below highlights GPU pricing at AWS and Azure. Reserving for 1-3 years yields discounts of 30-65%. As hyperscalers are investing billions of capex to ramp capacity, they are looking for revenue visibility to justify those investments. Customers are better off paying for 1-year reserved pricing if they expect 60%+ utilization and 3-year reserved pricing if they expect 35%+ utilization. Any unused capacity that they could resell would dramatically reduce their total cost.

Source: Amazon Pricing. Azure Pricing
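The utilization thresholds quoted above follow from a simple break-even relationship. The sketch below assumes that on-demand capacity is paid for only in hours actually used, while a reserved instance is paid for in every hour at a discounted rate; under that assumption the break-even utilization is one minus the reserved discount. The 40% and 65% discounts are illustrative picks from the 30-65% range that reproduce the 60% and 35% thresholds, and the function names and resale parameters are hypothetical.

```python
def break_even_utilization(reserved_discount: float) -> float:
    """Utilization above which reserving is cheaper than on-demand.

    On-demand cost per calendar hour = utilization * on_demand_rate
    Reserved cost per calendar hour  = (1 - reserved_discount) * on_demand_rate
    Setting the two equal gives utilization = 1 - reserved_discount.
    """
    if not 0.0 <= reserved_discount < 1.0:
        raise ValueError("reserved_discount must be in [0, 1)")
    return 1.0 - reserved_discount


def net_reserved_cost(on_demand_rate, reserved_discount, utilization,
                      resale_discount=0.0, resale_fill=0.0):
    """Net cost per calendar hour of a reserved GPU after reselling idle hours.

    resale_fill:     fraction of idle hours that find a buyer (assumption)
    resale_discount: discount to the on-demand rate at which idle hours sell
    """
    reserved_rate = (1.0 - reserved_discount) * on_demand_rate
    idle_share = 1.0 - utilization
    resale_income = idle_share * resale_fill * (1.0 - resale_discount) * on_demand_rate
    return reserved_rate - resale_income


one_year = break_even_utilization(0.40)    # illustrative 1-year discount
three_year = break_even_utilization(0.65)  # illustrative 3-year discount
print(f"1-year break-even: {one_year:.0%}, 3-year break-even: {three_year:.0%}")

# A customer at 50% utilization, normalized on-demand rate of 1.0,
# reselling every idle hour at a 30% discount to on-demand.
print(net_reserved_cost(1.0, 0.40, 0.50, resale_discount=0.30, resale_fill=1.0))
```

In the last example the customer would pay 0.50 per calendar hour on-demand and 0.60 reserved, but reselling the idle half of the reservation brings the net reserved cost down to about 0.25, which illustrates why a resale channel changes the reservation decision.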

If hyperscalers build a $50B business leasing GPU compute, there will be a significant opportunity to resell unutilized compute. Assuming there is 30% capacity to resell at a 30% discount, that is a $10B market reselling hyperscaler GPU compute.

However, there are other sources of supply outside the hyperscalers, including large enterprises (e.g. Meta, Tesla), private competitors (CoreWeave, Lambda Labs, etc.) and well-funded AI startups. From 2022 to 2025, Nvidia will have generated ~$300 billion of revenue. Assuming there is $70B of chips outside of hyperscalers, with 20% of that capacity available to resell at a 30% discount, that adds another $10B to the TAM, totaling $20 billion.
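The two resale pools above use the same formula: base × resellable share × (1 − resale discount). The sketch below applies it to the figures in the text and follows the text in treating the $70B of non-hyperscaler chips as the base for the second pool; the helper name `resale_pool` is hypothetical.

```python
def resale_pool(base_b: float, resellable_share: float, resale_discount: float) -> float:
    """Size of a resale market in $B: base x share resold x (1 - discount)."""
    return base_b * resellable_share * (1.0 - resale_discount)


# Hyperscaler pool: $50B GPU IaaS business, 30% resold at a 30% discount.
hyperscaler_pool = resale_pool(50.0, 0.30, 0.30)

# Non-hyperscaler pool: $70B of chips, 20% resold at a 30% discount.
non_hyperscaler_pool = resale_pool(70.0, 0.20, 0.30)

total_tam = hyperscaler_pool + non_hyperscaler_pool
print(f"Hyperscaler: ${hyperscaler_pool:.1f}B")
print(f"Non-hyperscaler: ${non_hyperscaler_pool:.1f}B")
print(f"Total: ${total_tam:.1f}B")
```

Unrounded, the pools come to about $10.5B and $9.8B, which the text rounds to $10B each for a total of roughly $20B.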

Akash Overview

Akash is a decentralized compute marketplace that was founded in 2015 and launched mainnet in September 2020 as a Cosmos app chain. The vision was to democratize cloud computing by offering underutilized compute priced significantly cheaper than the hyperscalers.

The blockchain handles coordination and settlement, storing records of requests, bids, leases and settlement, while execution is done off-chain. Akash hosts containers where users can run any cloud-native application. Akash is built with a set of cloud management services, including Kubernetes, to orchestrate and manage these containers. Deployments are transferred over a private peer-to-peer network isolated from the blockchain.

The first iteration of Akash was focused on CPU compute. At its peak, the business scaled to ~$200K annually of GMV and had 4-5K CPUs leased. However, the two largest pain points were onboarding (having to spin up a Cosmos wallet and pay for workloads in AKT tokens) and churn (had to fund a wallet with AKT and if AKT ran out or prices changed, the workloads went down with no backup provider).

Over the last year, Akash has transitioned from focusing on CPU to GPU compute, taking advantage of this paradigm shift in computing infrastructure and the supply shortage.

Akash GPU Supply Side

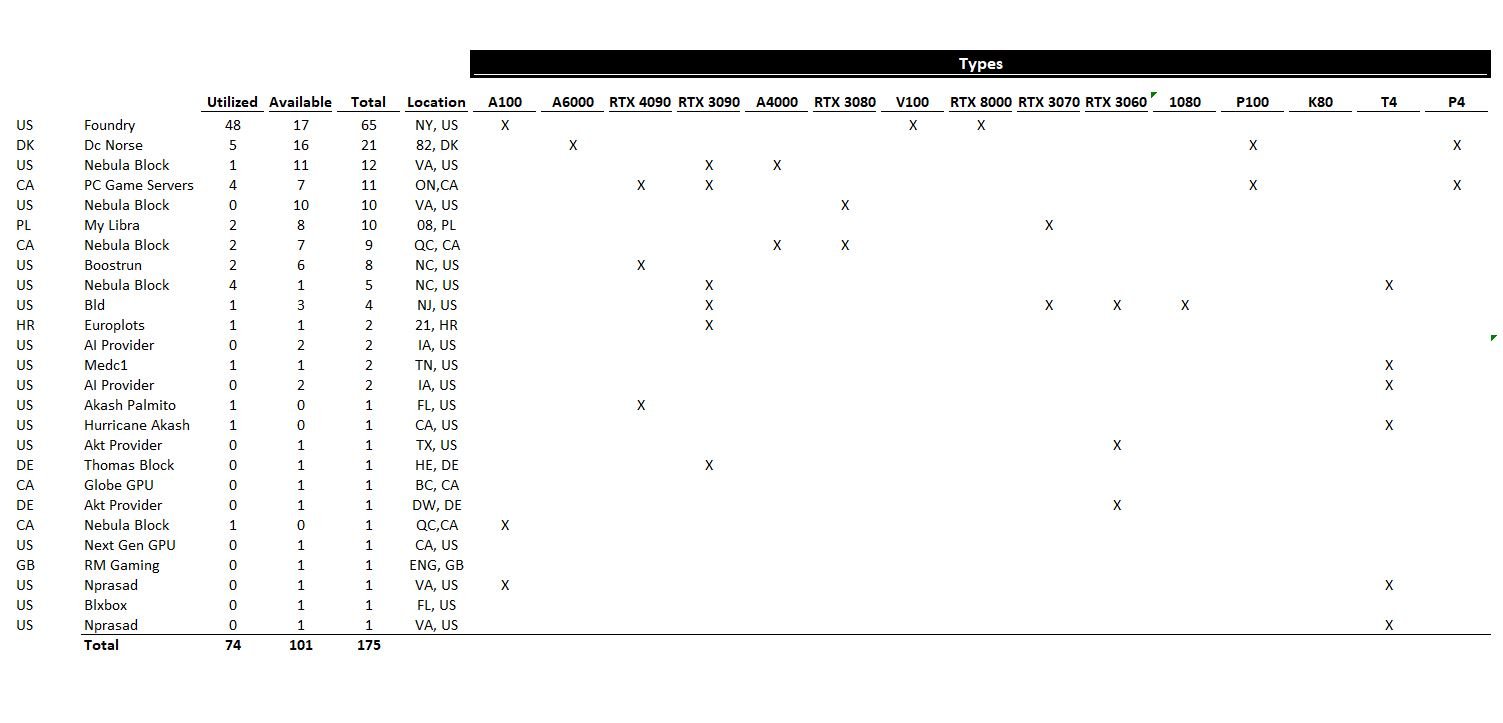

Akash’s GPU network went live on mainnet in September 2023. Since then, Akash has scaled to 150-200 GPUs reaching 50-70% utilization.

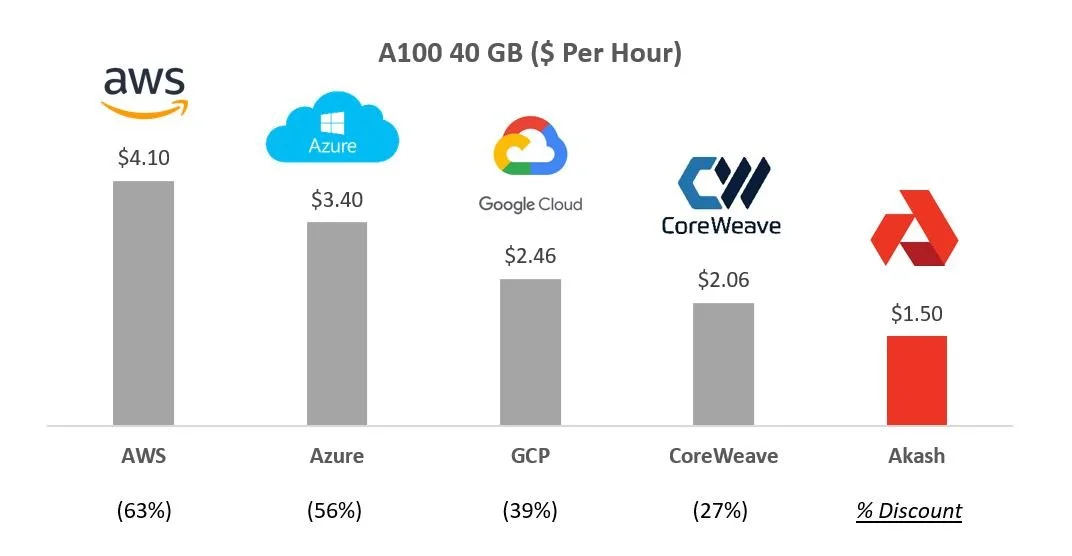

The comparison below shows the price of an Nvidia A100 across several of the leading providers. Akash is 30-60% cheaper than the competition.

Source: CoreWeave Pricing. Akash Pricing

There are around 19 unique providers on the Akash network across 7 countries, supplying over 15 types of chips. The largest provider is Foundry, a DCG-backed company that also does crypto mining and staking.

Source: https://deploy.cloudmos.io/providers (as of January 17, 2024)

Akash has largely been focused on enterprise-grade chips (A100s), which have traditionally been used to power AI workloads. While it has also offered some consumer-grade chips, those have historically been difficult to use for AI due to power, software and latency issues. There are several companies, such as FedML, io.net and Gensyn, that are trying to build an orchestration layer that will allow for AI edge compute.

As more and more of the market moves to inference vs. training, consumer-grade GPUs could become more viable, but today the market is largely centered around training with enterprise grade chips.

Source: Lambda Labs GPU Benchmarks. Highlighted chips are available on Akash’s platform

On the supply side, Akash is focused on public hyperscalers, private GPU providers, crypto miners and enterprises that hold underutilized GPUs.

Public hyperscalers. The biggest unlock would be for the public hyperscalers (Azure, AWS, GCP) to allow their customers to resell unutilized capacity to the Akash marketplace. This would allow them to have revenue visibility to make their capital investments. Once one hyperscaler allows this, the others would likely need to follow to maintain competitive share. As mentioned earlier, hyperscalers will likely have a $50B IaaS opportunity, creating a large resale opportunity for Akash’s marketplace.

Private competitors. In addition to the public hyperscalers, there exist several private companies (CoreWeave, Lambda Labs, etc.) that also lease GPUs. Given the competitive dynamics with hyperscalers attempting to build their own ASICs as alternative hardware, Nvidia has shifted more of its supply to some of these private companies. Pricing at the private competitors is often lower than at the hyperscalers (e.g. A100 up to 50% cheaper). CoreWeave, the most high-profile private competitor, was once a crypto mining company that pivoted to building data centers and offering GPU infrastructure in 2019. It is raising at a $7 billion valuation and is backed by Nvidia. CoreWeave is growing rapidly, generating $500M of revenue in 2023 and expecting $1.5-2B of revenue in 2024. CoreWeave has 45K Nvidia chips, and estimates show that these private competitors could have a total of 100K+ GPUs. Enabling a secondary marketplace for their customer base could allow these private competitors to gain share versus the public hyperscalers.

Crypto miners. Crypto miners have historically been large consumers of Nvidia GPUs. Given the computational complexity of solving cryptographic proofs, GPUs emerged as the dominant hardware for Proof-of-Work networks. As Ethereum moved from Proof-of-Work to Proof-of-Stake, this created a lot of excess capacity. An estimated ~20% of the freed-up chips can be repurposed for AI workloads. Furthermore, Bitcoin miners are also looking to diversify their revenue streams. Over the past few months, Hut 8, Applied Digital, Iris Energy, Hive and other Bitcoin miners have all announced AI/ML strategies. The largest supplier on Akash is Foundry, which is one of the largest Bitcoin miners.

Enterprises. As shown before, Meta has one of the largest stockpiles of GPUs, with 15,000 A100s at 5% utilization. Similarly, Tesla also holds 15,000 A100s. Enterprise compute utilization is typically <50%. Given the amount of venture funding into the space, a lot of AI/ML startups have also bought ahead of their chip consumption. Being able to resell unused capacity will reduce the total cost of ownership for these smaller companies. Interestingly enough, there is also a potential tax advantage in leasing old GPUs and tax harvesting the depreciation versus selling older chips outright.

Akash GPU Demand Side

For most of 2022 and 2023, prior to the launch of the GPU network, CPU GMV had been ~$50K annualized. Since the launch of the GPU network, GMV has reached $500K-1M annualized with 50-70% utilization of GPUs on the network.

Akash has been working on reducing user friction, improving user experience, and broadening use cases.

USDC Payments: Akash has recently allowed stable payments in USDC such that customers are no longer subject to the friction of buying AKT and the price volatility of holding AKT until payment.

Metamask Wallet Support: Akash has also implemented Metamask Snap for easier onboarding versus spinning up a Cosmos specific wallet.

Enterprise Level Support: Overclock Labs, the creators of the Akash Network, has launched AkashML, a frontend to make it easier to onboard users onto the Akash network with enterprise level support.

Self-Serve: Cloudmos, recently acquired by Akash, has also launched an easy-to-use self-serve interface for deploying GPUs. Previously, deployments had to be done through command line code.

Choice: While the focus has predominantly been on Nvidia enterprise-grade chips, Akash also offers consumer-grade chips and, as of late 2023, added support for AMD chips.

Akash is also proving out use cases with the network. During GPU testnet, the community demonstrated that it could use the network to deploy and run inference on many popular AI models. Both the Akash Chat and Stable Diffusion XL applications showcase the ability of Akash to run inference. We believe that over time, the inference market will be significantly larger than the training market. Today, the cost of an AI powered search is $0.02 (10x higher than Google’s current cost). Given there are 3T searches a year, this would be $60B annually. To put that in context, the cost of training an OpenAI model was ~$100M. While costs will likely go down for both, this highlights the significant difference in revenue pools longer term.
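The comparison between the inference and training cost pools is straightforward multiplication. The sketch below uses only the figures quoted above ($0.02 per AI-powered search, 3T searches a year, a 10x premium over Google's current cost, and ~$100M to train a model); the variable names are illustrative.

```python
cost_per_ai_search_usd = 0.02        # quoted cost of an AI-powered search
searches_per_year = 3e12             # 3T searches a year
ai_cost_multiple_vs_google = 10      # "10x higher than Google's current cost"
training_cost_usd = 100e6            # ~$100M to train a model

# Annual cost if every search were AI-powered.
annual_inference_cost_usd = cost_per_ai_search_usd * searches_per_year

# Per-search cost implied for Google today by the 10x statement.
implied_google_cost_per_search = cost_per_ai_search_usd / ai_cost_multiple_vs_google

# How many training runs the annual inference bill would pay for.
inference_to_training_ratio = annual_inference_cost_usd / training_cost_usd

print(f"Annual inference cost: ${annual_inference_cost_usd / 1e9:.0f}B")
print(f"Implied current cost per search: ${implied_google_cost_per_search:.3f}")
print(f"Inference vs. one training run: {inference_to_training_ratio:.0f}x")
```

On these inputs the annual inference bill is about 600 times the quoted cost of a single training run, which is the gap in revenue pools the paragraph points to.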

Given most of the demand for high-end chips today is for training, Akash is also currently working on showing that the Akash network can be used to train a model, with a goal to launch the model by early 2024. After using homogeneous chips from a single vendor, the next project will be to use heterogeneous chips from multiple vendors.

The roadmap for Akash is robust. Some product features that are being worked on include secret management support, on-demand/reserved instances and better discoverability.

Tokenomics and Incentives

Akash charges 4% for payments in AKT and 20% in USDC. The 20% take rate is similar to what we have seen in traditional internet marketplaces (e.g. Uber 30%).

Akash has ~58% of its tokens circulating (225M circulating out of a 388M max supply). Inflation per year has been raised from 8% to 13%. 60% of the circulating tokens are currently staked, with a 21-day unbonding period.

Both 40% (formerly 25%) of the inflation and the take rate on GMV will go into the community pool, which currently holds $10M of AKT tokens.

The uses for these sources of capital are still to be determined but will be split between public funding, provider incentives, staking, a potential burn and the community pool.

Source: Akash Github

On Jan 19, Akash launched a proposal for a $5M pilot incentive program to bring on 1,000 A100s onto the platform. Over time, the goal would be giving supply side revenue visibility (e.g. 95% effective utilization) for providers that onboard to the marketplace.

Valuation & Scenario Analysis

Below, we present a few scenarios and illustrative assumptions for Akash’s key drivers:

Near-Term Case: We estimate that if Akash is able to get to 15,000 A100s, this would generate close to $150M of GMV. At a 20% take rate, that would be $30M of protocol fees to Akash. Given the growth trajectory, at a 100x multiple (which takes into account the valuations for AI), that would be a $3B outcome.

Base Case: We assume the IaaS market opportunity is in line with Morgan Stanley’s estimates of $50B. Assuming 70% utilization, there is $15B of capacity to resell. Assuming this capacity is discounted by 30% yields $10B, with an additional $10B from other non-hyperscaler sources. Given marketplaces typically enjoy strong moats, we assume Akash is able to achieve 33% share (Airbnb 20% share of vacation rental market, Uber 75% share of ride hailing market, DoorDash 65% share). At a 20% take rate, which is also in line with internet marketplaces, this yields $1B of protocol fees. At a 10x multiple, Akash would be a $10B outcome.

Upside Case: Our upside case uses the same framework as our base case. We assume $20B resell opportunity due to being able to penetrate more unique sources of GPUs and higher share gain at 50%.
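As a sanity check on the Near-Term Case, the sketch below reproduces its fee and valuation arithmetic and backs out the revenue per GPU that the $150M GMV figure implies. The hourly prices are derived under two assumptions that are not in the scenario itself: a year of 8,760 hours, and utilization at the 50% and 70% ends of the range currently observed on the network. Function names are hypothetical.

```python
HOURS_PER_YEAR = 8760  # 365 days x 24 hours (assumption: no leap year)


def near_term_case(gpus, gmv_usd, take_rate, fee_multiple):
    """Reproduce the Near-Term Case arithmetic from its stated inputs."""
    protocol_fees = gmv_usd * take_rate
    return {
        "gmv_per_gpu": gmv_usd / gpus,
        "protocol_fees": protocol_fees,
        "implied_value": protocol_fees * fee_multiple,
    }


def implied_hourly_price(gmv_per_gpu, utilization):
    """Price per leased GPU-hour needed to reach gmv_per_gpu at a given utilization."""
    return gmv_per_gpu / (HOURS_PER_YEAR * utilization)


case = near_term_case(gpus=15_000, gmv_usd=150e6, take_rate=0.20, fee_multiple=100)
print(f"GMV per GPU: ${case['gmv_per_gpu']:,.0f} per year")
print(f"Protocol fees: ${case['protocol_fees'] / 1e6:.0f}M")
print(f"Implied value: ${case['implied_value'] / 1e9:.0f}B")

for utilization in (0.50, 0.70):
    price = implied_hourly_price(case["gmv_per_gpu"], utilization)
    print(f"Implied price at {utilization:.0%} utilization: ${price:.2f} per GPU-hour")
```

The scenario implies about $10,000 of GMV per A100 per year, or roughly $1.63 to $2.28 per leased GPU-hour across that utilization range.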

For context, Nvidia is a $1.2T public market cap company, while OpenAI was valued at $80B, Anthropic $20B and CoreWeave $7B in the private markets. Within crypto, Render and TAO are valued at $2B+ and $5.5B+, respectively.

Disclaimer: All forecasts, assumptions, and performance metrics are hypothetical

Risks & Mitigants

There are a few key risks we are actively monitoring for our investment in Akash:

Concentration of both supply and demand: Today, the majority of GPU demand is for training extremely large, complex LLMs by large tech companies (GPT4 had 1.5T parameters vs. GPT3 with 175B vs. GPT2 at 1.5B). The majority of supply is concentrated at the large public hyperscalers. Over time, we believe there will be more interest in training smaller AI models, which are cheaper and can better handle private data. Fine-tuning will become more and more important as models shift from general purpose to vertical specific. Lastly, inference will become more and more critical as usage and adoption accelerates.

Competition: There are a number of crypto and non-crypto companies that are trying to unlock underutilized GPUs. Some of the more notable crypto protocols:

Latency issues and technological challenges: Given training is a very resource-intensive task, typically run with all chips sitting in one data center, it is still unclear whether a model can be trained on a dispersed, non-colocated stack of GPUs. OpenAI’s next training facility is planned to have 75K+ GPUs in a single location in Arizona. These are problems that orchestration layers such as FedML, io.net and Gensyn are trying to solve.

Special thanks to Greg Osuri (Co-Founder, Akash), Arthur Cheong & Bryan Tan (DeFiance) and Zach Ross (Foundry) for their review and input.

[1] All forecasts and assumptions are hypothetical. See “Valuation & Scenario Analysis” section for details

LEGAL DISCLAIMERS

THIS POST IS FOR INFORMATIONAL PURPOSES ONLY AND SHOULD NOT BE RELIED UPON AS INVESTMENT ADVICE. This post has been prepared by Modular Capital Investments, LLC (“Modular Capital”) and is not intended to be (and may not be relied on in any manner as) legal, tax, investment, accounting or other advice or as an offer to sell or a solicitation of an offer to buy any securities of any investment product or any investment advisory service. The information contained in this post is superseded by, and is qualified in its entirety by, such offering materials.

THIS POST IS NOT A RECOMMENDATION FOR ANY SECURITY OR INVESTMENT. References to any portfolio investment are intended to illustrate the application of Modular Capital’s investment process only and should not be used as the basis for making any decision about purchasing, holding or selling any securities. Nothing herein should be interpreted or used in any manner as investment advice. The information provided about these portfolio investments is intended to be illustrative and it is not intended to be used as an indication of the current or future performance of Modular Capital’s portfolio investments.

AN INVESTMENT IN A FUND ENTAILS A HIGH DEGREE OF RISK, INCLUDING THE RISK OF LOSS. There is no assurance that a Fund’s investment objective will be achieved or that investors will receive a return on their capital. Investors must read and understand all the risks described in a Fund’s final confidential private placement memorandum and/or the related subscription posts before making a commitment. The recipient also must consult its own legal, accounting and tax advisors as to the legal, business, tax and related matters concerning the information contained in this post to make an independent determination and consequences of a potential investment in a Fund, including US federal, state, local and non-US tax consequences.

PAST PERFORMANCE IS NOT INDICATIVE OF FUTURE RESULTS OR A GUARANTEE OF FUTURE RETURNS. The performance of any portfolio investments discussed in this post is not necessarily indicative of future performance, and you should not assume that investments in the future will be profitable or will equal the performance of past portfolio investments. Investors should consider the content of this post in conjunction with investment fund quarterly reports, financial statements and other disclosures regarding the valuations and performance of the specific investments discussed herein. Unless otherwise noted, performance is unaudited.

DO NOT RELY ON ANY OPINIONS, PREDICTIONS, PROJECTIONS OR FORWARD-LOOKING STATEMENTS CONTAINED HEREIN. Certain information contained in this post constitutes “forward-looking statements” that are inherently unreliable and actual events or results may differ materially from those reflected or contemplated herein. Modular Capital does not make any assurance as to the accuracy of those predictions or forward-looking statements. Modular Capital expressly disclaims any obligation or undertaking to update or revise any such forward-looking statements. The views and opinions expressed herein are those of Modular Capital as of the date hereof and are subject to change based on prevailing market and economic conditions and will not be updated or supplemented. Any projections, estimates, forecasts, targets, prospects, and/or opinions expressed in this blog are subject to change without notice and may differ or be contrary to opinions expressed by others.

EXTERNAL SOURCES. Certain information contained herein has been obtained from third-party sources. Although Modular Capital believes the information from such sources to be reliable, Modular Capital makes no representation as to its accuracy or completeness. This post may contain links to third-party websites (“External Sites”). The existence of any such link does not constitute an endorsement of such websites, the content of the websites, or the operators of the websites. These links are provided solely as a convenience to you and not as an endorsement by us of the content on such External Sites.